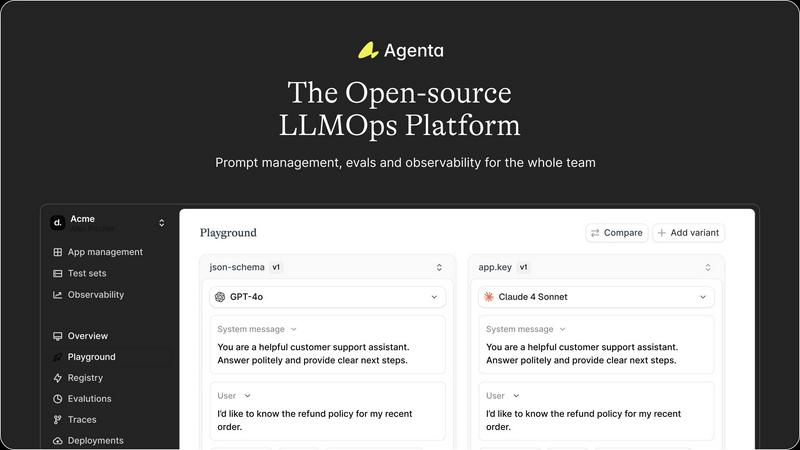

Agenta

Agenta is your all-in-one open-source LLMOps platform for creating reliable AI apps with seamless collaboration and e...

About Agenta

Agenta is an open-source LLMOps platform that helps AI teams develop and deploy reliable large language model (LLM) applications. It brings developers and subject matter experts into one shared workflow, so they can run experiments together. Agenta provides a central hub for prompt management, automated evaluation, and observability. These tools target a common problem: because LLM outputs are unpredictable, teams often end up with scattered workflows and ad hoc debugging. With Agenta, teams can track version history, test and compare prompts, and monitor production systems in real time. This structured process lets teams validate changes before release, so they can ship faster and with more confidence in how their applications behave.

Features of Agenta

Centralized Workflow Management

Agenta keeps prompts, evaluations, and traces in a single platform. This replaces scattered workflows with one shared place where every team member can find and manage these resources.

Unified Playground for Experimentation

The unified playground lets teams compare prompts and models side by side in a controlled environment. It also records the complete version history of each prompt, so every change is documented and can be reverted.
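To make "documented and reversible" concrete, here is a minimal sketch of an append-only prompt history in plain Python. The class and method names (`PromptHistory`, `commit`, `revert`) are hypothetical and are not Agenta's API; the sketch only assumes that a revert is recorded as a new version rather than by deleting later ones.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    """One immutable snapshot of a prompt template (hypothetical schema)."""
    number: int
    template: str
    message: str


@dataclass
class PromptHistory:
    """Append-only version history for one prompt (illustrative, not Agenta's API)."""
    versions: list[PromptVersion] = field(default_factory=list)

    def commit(self, template: str, message: str) -> PromptVersion:
        # Each change gets the next version number; nothing is overwritten.
        version = PromptVersion(len(self.versions) + 1, template, message)
        self.versions.append(version)
        return version

    def get(self, number: int) -> PromptVersion:
        # Version numbers start at 1; reject anything outside the history.
        if not 1 <= number <= len(self.versions):
            raise KeyError(f"no version {number}")
        return self.versions[number - 1]

    def latest(self) -> PromptVersion:
        return self.versions[-1]

    def revert(self, number: int) -> PromptVersion:
        # Reverting records a new version that copies an old template,
        # so the full history stays intact and auditable.
        old = self.get(number)
        return self.commit(old.template, f"Revert to v{number}")


history = PromptHistory()
history.commit("Summarize: {text}", "initial")
history.commit("Summarize in one sentence: {text}", "shorter output")
history.revert(1)
print(history.latest().number, history.latest().template)  # 3 Summarize: {text}
```

Recording a revert as a new version, instead of truncating the list, keeps the record of what was tried and why, which is the property a side-by-side playground relies on.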

Automated Evaluation Tools

With Agenta, teams can replace guesswork with systematic, automated evaluation. The platform supports several kinds of evaluators, including LLM-as-a-judge, built-in evaluators, and custom code, so each change is validated against evidence rather than intuition.
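As an illustration of what a custom-code evaluator can look like, the sketch below scores outputs by normalized exact match and averages the score over a small test set so two prompt variants can be compared. The function names and signatures are assumptions made for this example, not the interface Agenta expects; LLM-as-a-judge evaluators are not shown.

```python
import re
from statistics import mean


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences
    # are not counted as errors.
    return re.sub(r"\s+", " ", text).strip().lower()


def exact_match(output: str, correct_answer: str) -> float:
    """Hypothetical evaluator: 1.0 if the output matches the reference after normalization."""
    return 1.0 if normalize(output) == normalize(correct_answer) else 0.0


def evaluate_variant(outputs: list[str], answers: list[str]) -> float:
    """Average exact-match score of one prompt variant over a test set."""
    if len(outputs) != len(answers):
        raise ValueError("outputs and answers must have the same length")
    if not outputs:
        raise ValueError("test set is empty")
    return mean(exact_match(o, a) for o, a in zip(outputs, answers))


answers = ["Paris", "4", "blue whale"]
variant_a = ["paris", "4", "Blue  whale"]
variant_b = ["Paris, France", "four", "blue whale"]
print(evaluate_variant(variant_a, answers))            # 1.0
print(round(evaluate_variant(variant_b, answers), 3))  # 0.333
```

Exact match is deliberately strict: it penalizes "Paris, France" even though a human would accept it. That gap is the usual reason to combine code-based checks with LLM-as-a-judge or human review.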

Observability and Debugging Capabilities

Agenta's observability tools trace every request made to an LLM application. Teams can use these traces to find where a request failed and then turn any trace into a test case with one click, which makes debugging considerably simpler.
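The idea of turning a trace into a test can be sketched without any particular SDK. Below, a trace is modeled as a list of spans; the code finds the first failing span and copies the root request's inputs into a test case. The `Trace`/`Span` schema and the function names are hypothetical, not Agenta's data model, and the expected output is assumed to come from a reviewer because a failing trace does not contain the correct answer.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Span:
    """One step of a traced request (hypothetical schema)."""
    name: str
    inputs: dict[str, Any]
    output: Optional[str]
    error: Optional[str] = None


@dataclass
class Trace:
    trace_id: str
    spans: list[Span]  # execution order; spans[0] is the root request


def first_failure(trace: Trace) -> Optional[Span]:
    # Walk spans in execution order and return the first one that reported an error.
    for span in trace.spans:
        if span.error is not None:
            return span
    return None


def trace_to_test_case(trace: Trace, expected_output: str) -> dict[str, Any]:
    """Copy the root request's inputs into a reusable test case."""
    if not trace.spans:
        raise ValueError("trace has no spans")
    root = trace.spans[0]
    return {
        "source_trace": trace.trace_id,
        "inputs": dict(root.inputs),
        "expected_output": expected_output,  # supplied by a reviewer
    }


trace = Trace(
    trace_id="t-42",
    spans=[
        Span("chat_app", {"question": "What is the capital of France?"}, "I don't know."),
        Span("retriever", {"query": "capital of France"}, None, error="timeout"),
        Span("llm_call", {"prompt": "Answer using the retrieved context."}, "I don't know."),
    ],
)
failed = first_failure(trace)
print(failed.name, failed.error)  # retriever timeout
case = trace_to_test_case(trace, expected_output="Paris")
print(case["inputs"])
```

Keeping `source_trace` on the test case links each regression test back to the production request that motivated it.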

Use Cases of Agenta

Collaborative LLM Development

Agenta lets product managers, developers, and domain experts experiment with, compare, and debug prompts together. Everyone works from the same prompts, results, and traces, so each role can contribute to building a more reliable LLM application.

Efficient Prompt Management

By centralizing prompt management, Agenta lets teams organize their prompts systematically, which makes it easier to track changes, compare performance across variants, and keep every variation accounted for during development.

Streamlined Evaluation Processes

Teams can use Agenta to build a structured evaluation process that combines feedback from domain experts with automated assessments. Each iteration of the application is then tested and validated before it ships.

Real-Time Monitoring and Feedback

With Agenta's observability tools, teams can monitor their production systems in real time. This helps them detect regressions and performance issues as they appear and respond quickly based on user feedback and system behavior.

Frequently Asked Questions

What makes Agenta different from other LLM platforms?

Agenta is an open-source LLMOps platform that brings the LLM development workflow into one place: prompt management, evaluation, and observability, with collaboration built in for the whole team. This combination makes it easier to build reliable LLM applications.

Is Agenta suitable for teams of all sizes?

Yes. Agenta is aimed at AI teams of all sizes, from startups to large enterprises. Its collaboration and workflow features apply to any team building applications with LLMs.

Can I integrate Agenta with my existing tools?

Yes. Agenta integrates with popular frameworks and model providers such as LangChain, LlamaIndex, and OpenAI, so teams can add it to their existing tech stack without reworking it.

How does Agenta enhance the debugging process?

Agenta's observability tools trace each request and show where it failed. Teams can pinpoint issues faster and resolve them, which reduces downtime and improves overall application reliability.

You may also like:

Blueberry

Blueberry is a Mac app that combines your editor, terminal, and browser in one workspace. Connect Claude, Codex, or any model and it sees everything.

Anti Tempmail

Transparent email intelligence verification API for Product, Growth, and Risk teams

My Deepseek API

Affordable, Reliable, Flexible - Deepseek API for All Your Needs